Software that can translate spoken English into spoken Chinese almost instantly has been demonstrated by Microsoft.

The software preserves intonation and cadence so the translated speech still sounds like the original speaker.

Microsoft said research breakthroughs had reduced the number of errors made by the instant translation system.

It said it had modelled the system on the way the brain works in order to improve its accuracy.

Microsoft research boss Rick Rashid gave details of the project in a blogpost following a presentation he gave in Tianjin, China, in late October, which he said had started to “generate a bit of attention”.

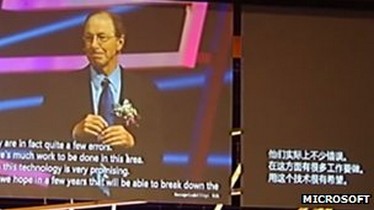

In the final few minutes of that presentation, Mr Rashid's words were turned into Chinese almost instantly by piping his spoken English through Microsoft’s translation system. The machine-generated version of his words also preserved some of his speaking style.

‘Dramatic change’

This translation became possible, he said, thanks to research done in Microsoft labs that built on earlier breakthroughs.

That earlier work ditched the pattern-matching approach of the first speech recognition systems in favour of statistical models that did a better job of capturing the variety of human speech.

Faster computers that could crunch more data had improved accuracy further, but error rates were still running at about 20-25%, he said.

In 2010, wrote Mr Rashid, Microsoft researchers working with scientists at the University of Toronto improved speech recognition further using deep neural networks, which are loosely modelled on the way brains learn to recognise sounds.

Applying this technology to speech recognition cut error rates to about 15%, said Mr Rashid, who called the improvement a “dramatic change”. Error rates were likely to fall further as the networks were trained for longer, he said.
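Put in relative terms, and assuming the earlier 20-25% figure and the new 15% figure measure the same kind of error, the size of the drop works out as follows:

```latex
\text{relative reduction} = \frac{E_{\text{old}} - E_{\text{new}}}{E_{\text{old}}},
\qquad
\frac{20 - 15}{20} = 0.25,
\qquad
\frac{25 - 15}{25} = 0.40
```

In other words, the neural-network approach removed roughly a quarter to two-fifths of the errors the earlier systems made.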

Mr Rashid used the improved speech recognition system during his presentation. First, the audio of his speech was transcribed into English text. Next, this text was translated into Chinese and the words reordered so they made sense in Chinese. Finally, the Chinese characters were piped through a text-to-speech system so that they emerged sounding like Mr Rashid.
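The three stages can be sketched as a chain of functions. This is a toy illustration, not Microsoft's system: the function names, the four-word lexicon, the single reordering rule and the `voice_profile` label are all hypothetical, and each stage stands in for what would in practice be a large statistical model.

```python
from dataclasses import dataclass


@dataclass
class SynthesisedSpeech:
    text: str            # the Chinese text that would be spoken
    voice_profile: str   # hypothetical label for a voice model of the speaker


def recognise_speech(audio_frames: list[str]) -> str:
    # Stage 1 (stand-in): a real recogniser maps acoustic features to words.
    # Here each "frame" is already a word, so we simply join them.
    return " ".join(audio_frames)


# Hypothetical toy English-to-Chinese lexicon, for illustration only.
LEXICON = {"i": "我", "like": "喜欢", "tea": "茶", "today": "今天"}


def translate(english: str) -> list[str]:
    # Stage 2 (stand-in): word-by-word lookup plus one reordering rule.
    words = english.lower().split()
    # Toy reordering rule: Chinese usually places a time word such as
    # "today" before the verb, so move it to just after the subject.
    if "today" in words and len(words) > 1:
        words.remove("today")
        words.insert(1, "today")
    # Unknown words are passed through unchanged.
    return [LEXICON.get(w, w) for w in words]


def synthesise(chinese_words: list[str], voice_profile: str) -> SynthesisedSpeech:
    # Stage 3 (stand-in): a real text-to-speech engine would render audio
    # with a voice model of the speaker; we only record which one it would use.
    return SynthesisedSpeech(text="".join(chinese_words), voice_profile=voice_profile)


def speech_to_speech(audio_frames: list[str], voice_profile: str) -> SynthesisedSpeech:
    # Chain the stages: speech -> English text -> Chinese words -> speech.
    english = recognise_speech(audio_frames)
    chinese = translate(english)
    return synthesise(chinese, voice_profile)


if __name__ == "__main__":
    result = speech_to_speech(["I", "like", "tea", "today"], "speaker-voice")
    print(result.text, result.voice_profile)
```

One consequence of chaining the stages like this is that any recognition error in the first step is carried straight through into the translation and the synthesised speech.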

“Of course, there are still likely to be errors in both the English text and the translation into Chinese, and the results can sometimes be humorous,” said Mr Rashid in the blogpost. “Still, the technology has developed to be quite useful.”

Many other technology companies, including AT&T and Google, have similar projects under way that aim to provide simultaneous translation. NTT Docomo has shown off a smartphone app that lets Japanese people call foreigners, with each side speaking their native language.